Engineering the Future: Why We Built Dataverses for the Agentic Era

As we navigate the complexities of the modern enterprise, we often find ourselves in a paradoxical state: we are drowning in data but starving for intelligence. The data lakehouse, a unified architecture for both structured and unstructured data, was meant to solve this, but the reality has been far from simple. Many organizations are still forced to stitch together disparate tools, from Apache Kafka for streaming to Apache Spark for batch processing. This fragmentation creates significant operational overhead, delays insights, and ultimately widens the gap between data and the AI solutions that rely on it. To address this complexity, we are proud to introduce Dataverses: a single, unified platform designed to make building data lakehouse and AI solutions quick, easy, and scalable.

From Operational Overhead to No-Ops Engineering

The primary hurdle in any data strategy is the pipeline itself. Traditional approaches require deep expertise in distributed systems and constant management of complex clusters. We engineered Dataverses to replace that work with a no-code workflow. By abstracting away the infrastructure, we provide:

- Zero Operational Overhead: The platform manages all underlying services, eliminating the need for dedicated teams to manage Kafka, Kafka Connect, or Spark Structured Streaming.

- Drag-and-Drop Pipelines: Users can build sophisticated Change Data Capture (CDC) pipelines in a simple drag-and-drop interface, turning what used to be a coding-intensive task (in Scala or Python) into a streamlined process.

- Immediate Velocity: Real-time data is no longer a "future goal"; it is an immediate reality. Users can deploy a pipeline and see real-time data in a dashboard instantly, shifting from legacy batch-centric operations to a continuous flow of information.

- Elasticity by Design: Scalability is automatic and built directly into the platform, removing the need for manual tuning of distributed systems.

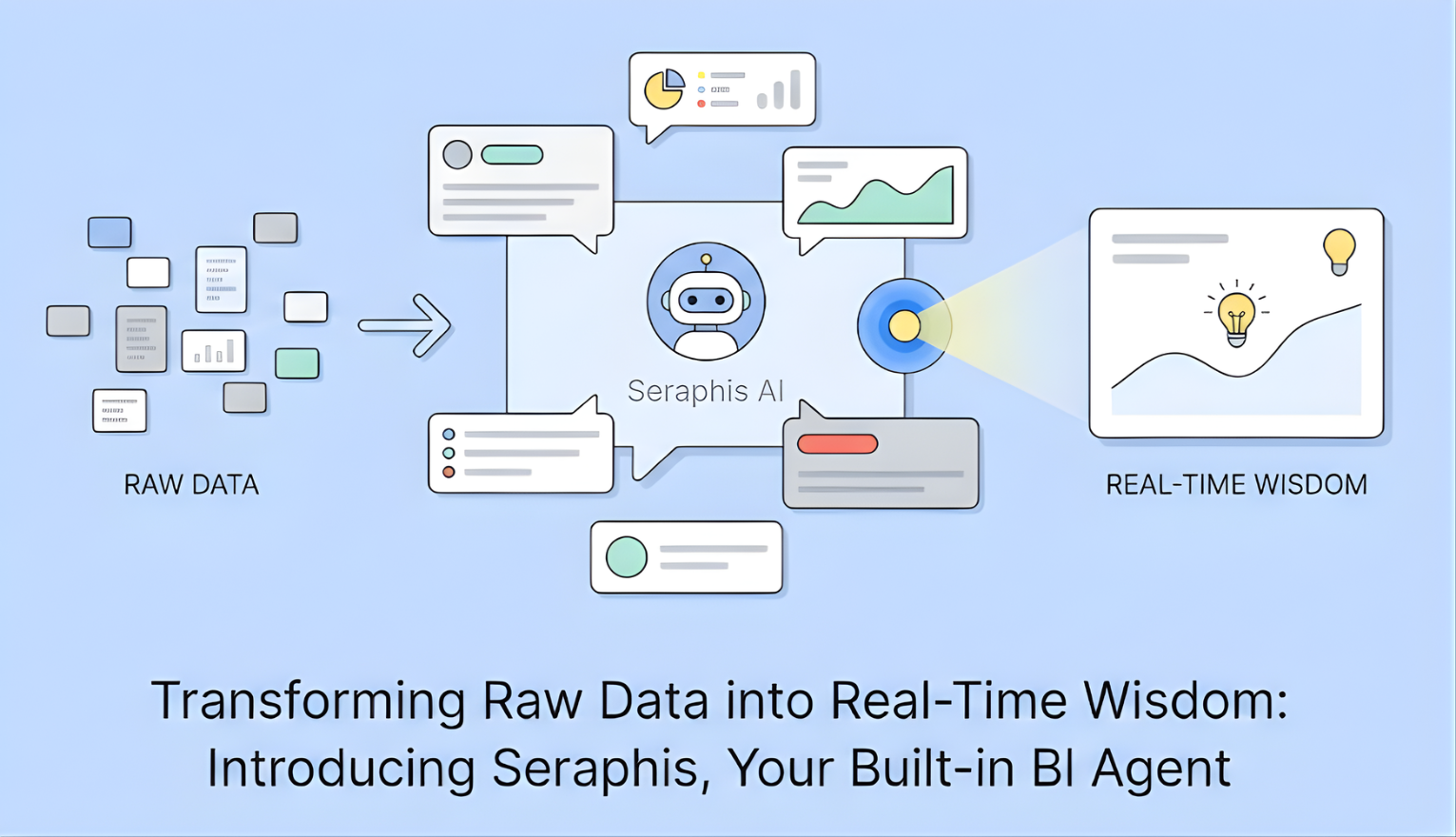
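For readers new to Change Data Capture, the core idea behind the pipelines described above can be sketched in a few lines of Python. This is a generic illustration, not the Dataverses API: the `ChangeEvent` shape and the `apply_changes` helper are hypothetical names chosen for the example, and an in-memory dictionary stands in for the target table.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable, Optional

Row = Dict[str, Any]


@dataclass(frozen=True)
class ChangeEvent:
    """One row-level change captured from a source database (hypothetical shape)."""
    op: str                    # "insert", "update", or "delete"
    key: int                   # primary key of the affected row
    row: Optional[Row] = None  # new row image; None for deletes


def apply_changes(target: Dict[int, Row], events: Iterable[ChangeEvent]) -> Dict[int, Row]:
    """Replay change events in order so the target mirrors the source table."""
    for event in events:
        if event.op in ("insert", "update"):
            if event.row is None:
                raise ValueError(f"{event.op} event for key {event.key} has no row image")
            target[event.key] = dict(event.row)  # upsert the latest row image
        elif event.op == "delete":
            target.pop(event.key, None)  # tolerate deletes for keys never seen
        else:
            raise ValueError(f"unknown operation: {event.op!r}")
    return target


# A short stream of changes, as a CDC source might emit them.
events = [
    ChangeEvent("insert", 1, {"name": "Ada", "plan": "free"}),
    ChangeEvent("insert", 2, {"name": "Lin", "plan": "pro"}),
    ChangeEvent("update", 1, {"name": "Ada", "plan": "pro"}),
    ChangeEvent("delete", 2),
]
replica = apply_changes({}, events)
print(replica)  # {1: {'name': 'Ada', 'plan': 'pro'}}
```

In a production pipeline the events would arrive continuously from a database log and the target would be a lakehouse table; the ordering and upsert logic is the part a no-code pipeline takes off the user's hands.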

Democratizing Data Intelligence

A platform's value is defined by its ability to convert raw data into actionable intelligence. Dataverses serves two groups of users:

- For Data Scientists: A seamless environment to build custom machine learning solutions directly on unified lakehouse data.

- For Business Analysts: We have lowered the barrier to entry with natural language data exploration. By asking questions in plain language in a notebook, analysts can replace complex SQL with intuitive conversations and get deep-dive insights in seconds rather than hours.

Grounding the Agentic AI Frontier

We are entering the Agentic AI Era, in which autonomous software agents perform complex tasks and make decisions based on corporate data. For an agent to be useful, however, it must be dependable. Dataverses offers a dedicated environment for building custom agents for specific use cases, from customer service automation to internal process optimization. Because these agents are grounded in the organization's "single source of truth" within our architecture, their responses are reliable. We are moving AI beyond the experimental prototype stage and into mission-critical, dependable applications.
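One way to picture grounding is an agent step that answers only from governed records and declines when no record exists. The Python sketch below is a minimal illustration under stated assumptions: `ORDER_STATUS` and `answer_order_status` are hypothetical names, the dictionary stands in for lakehouse data, and none of this reflects the actual Dataverses agent interface.

```python
from typing import Dict, Optional

# Hypothetical governed records standing in for the "single source of truth".
ORDER_STATUS: Dict[str, str] = {
    "A-1001": "shipped",
    "A-1002": "processing",
}


def answer_order_status(order_id: str, records: Dict[str, str]) -> str:
    """Answer from the records only; never guess when the record is missing."""
    status: Optional[str] = records.get(order_id)
    if status is None:
        # Declining is the dependable behavior when no grounding data exists.
        return f"I could not find order {order_id} in the system of record."
    return f"Order {order_id} is {status}."


print(answer_order_status("A-1001", ORDER_STATUS))
print(answer_order_status("B-9999", ORDER_STATUS))
```

The design choice worth noting is the explicit refusal path: a customer service agent that says it cannot find a record is more dependable than one that invents a plausible status.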

Building the Future, Today

Dataverses is more than just a storage layer; it is a complete Data and AI ecosystem designed for the speed of modern business. By unifying data engineering, intelligence, and AI development, we are empowering organizations to unlock their full potential without the burden of legacy system management. We invite you to stop managing clusters and start driving innovation. You can now build your data lakehouse and AI solutions in days, not months.

Explore the Dataverses Platform and Start Your Free Trial Today!