Scale Operations with Autonomous AI Agents

Deploy intelligent agents that connect your data, automate workflows, and drive growth autonomously.

The Dataverses

Next-Gen Intelligence Platform

Empowering your organization to build and deploy AI-driven solutions at scale.

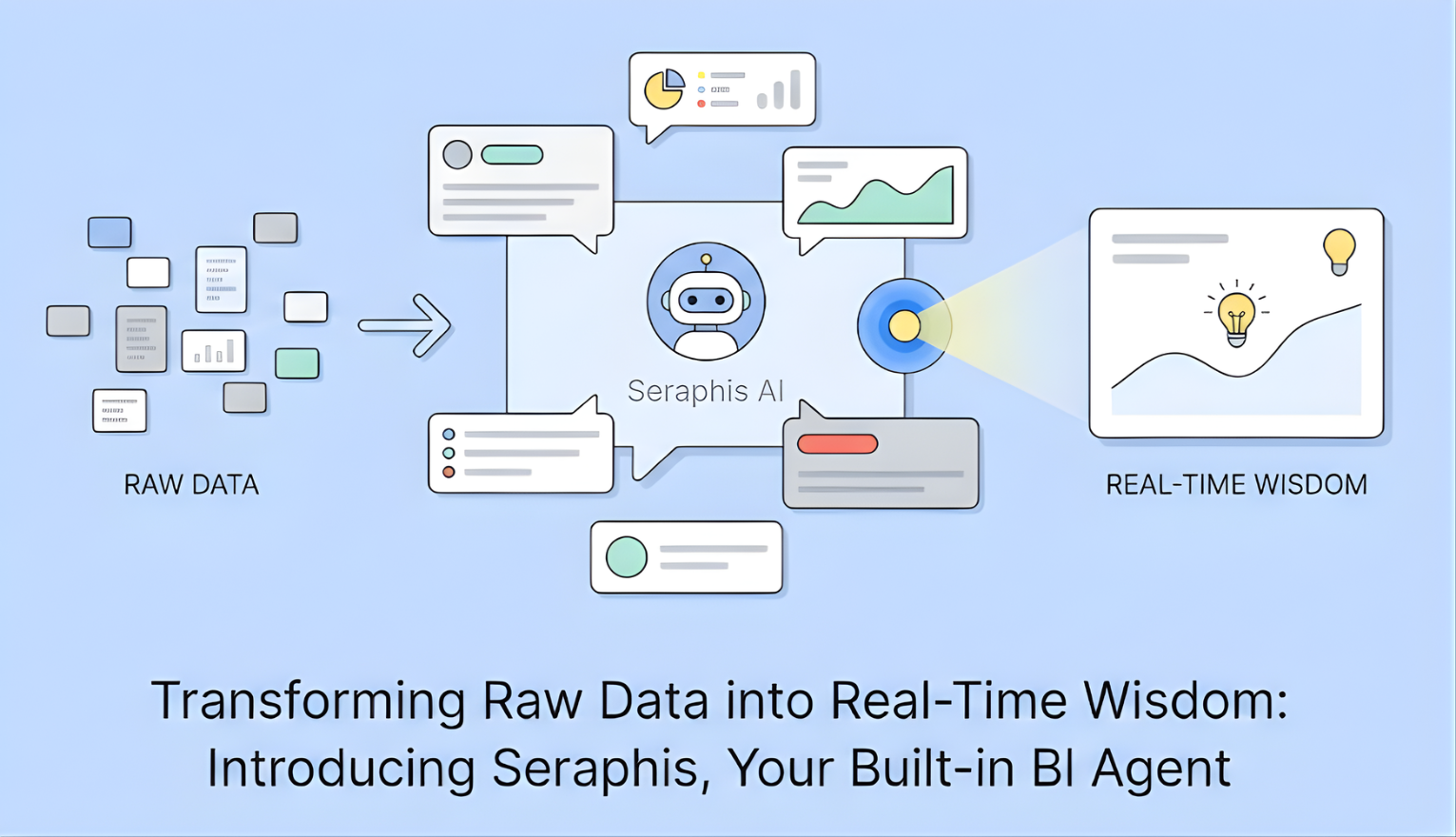

Real-Time Intelligence

Unlock instant insights from streaming data without sacrificing accuracy or governance. Process events as they happen and act in the moment.

Democratize Data Access

Empower every team member to explore and analyze data using intuitive tools and natural language, bridging the gap between data and decision-makers.

Reduce Complexity

Consolidate your data stack into one unified platform. Eliminate silos, reduce maintenance overhead, and significantly cut infrastructure costs.

Streaming Data Lakehouse + AI

Enabling your organization to create and launch AI-powered solutions that scale.

From Stream to Lakehouse, Seamlessly

Transform streaming data into structured lakehouse storage with declarative pipelines. Simple YAML configurations that automatically scale, monitor, and maintain complete data lineage from source to destination.

Frequently Asked Questions

Everything you need to know about Dataverses and how it can transform your data analytics workflow

Dataverses is a low-code, unified platform designed to simplify data management and intelligent automation. It functions as a managed streaming data lakehouse that integrates Apache Kafka, Spark, and Flink to process data in real time, enabling businesses to move from raw data to intelligent insights with query latency under 100 ms.

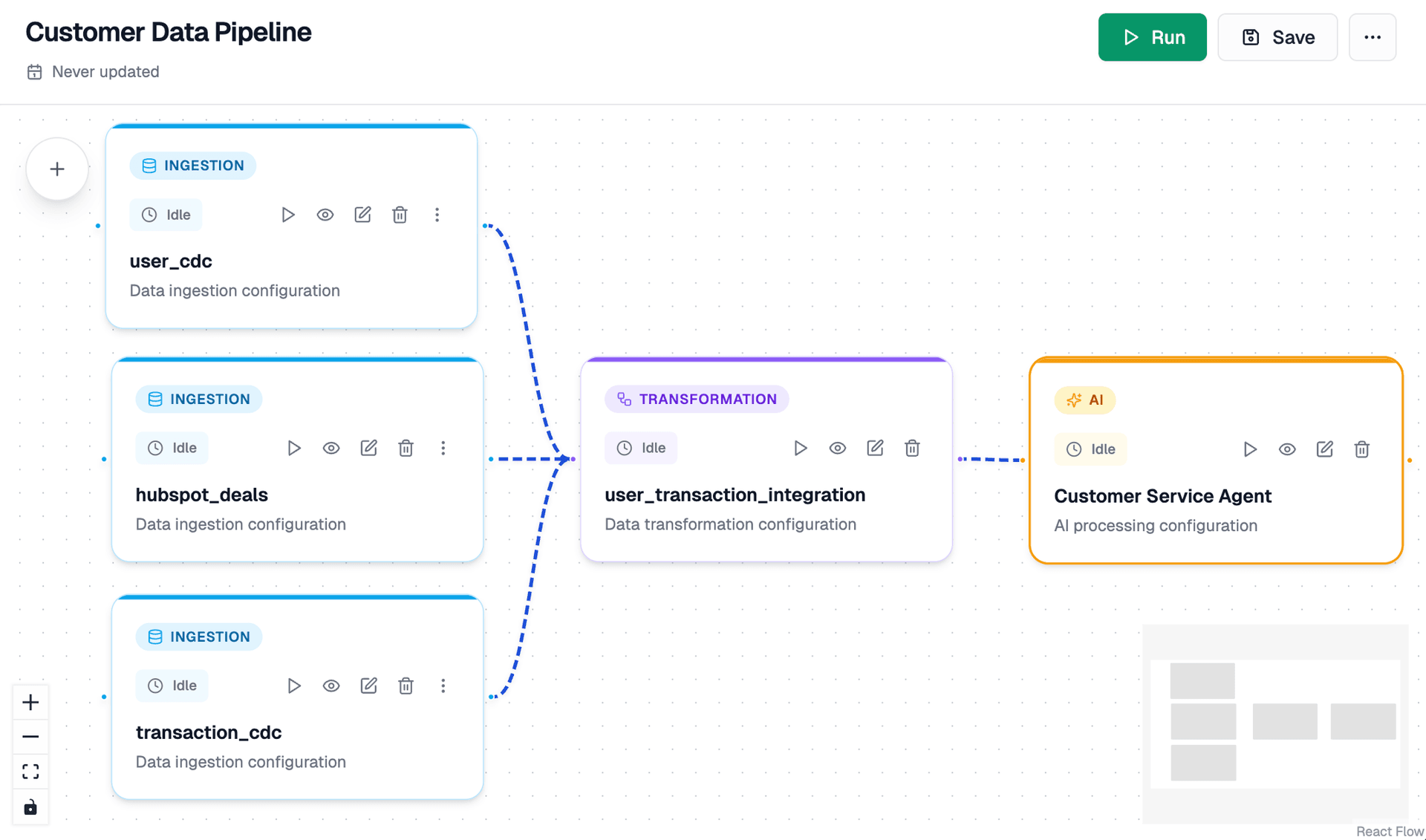

The Agent Studio is a dedicated environment where users can build, monitor, and deploy autonomous agents directly on live lakehouse data. It features an intuitive drag-and-drop canvas for designing multi-step agentic workflows and allows for production-ready deployment with a single click.

The platform provides natural language querying, automated insight generation, and no-code model training. This democratizes agent and model development across both technical and business teams.

Dataverses supports more than 50 data sources, including databases (PostgreSQL, MongoDB), cloud services (AWS, GCP), and message queues. It uses Zero-ETL CDC (Change Data Capture) ingestion to capture source changes automatically, with no manual pipeline development.
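For readers new to Change Data Capture, the idea can be illustrated with a minimal sketch: a stream of insert, update, and delete events is replayed in order onto a keyed copy of the source table. The event shape (`op`, `key`, `row`) and the `apply_changes` helper are assumptions made for illustration only; they are not the Dataverses API or its wire format.

```python
from typing import Any, Dict, Iterable

Row = Dict[str, Any]


def apply_changes(table: Dict[int, Row], events: Iterable[Dict[str, Any]]) -> Dict[int, Row]:
    """Replay change events in order onto a keyed table (mutates and returns it)."""
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            # Hypothetical event shape: inserts and updates carry the full new row.
            table[key] = event["row"]
        elif op == "delete":
            # Deleting a key that is already absent is treated as a no-op.
            table.pop(key, None)
        else:
            raise ValueError(f"unknown op: {op!r}")
    return table


# Illustrative change stream, as a source database might emit it.
events = [
    {"op": "insert", "key": 1, "row": {"id": 1, "status": "new"}},
    {"op": "insert", "key": 2, "row": {"id": 2, "status": "new"}},
    {"op": "update", "key": 1, "row": {"id": 1, "status": "paid"}},
    {"op": "delete", "key": 2},
]
replica = apply_changes({}, events)
print(replica)
```

Because the events are applied strictly in order, the replica ends up holding only the latest state of each surviving row.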

The architecture provides a unified storage layer that combines the flexibility of a data lake with the ACID compliance of a data warehouse. It is purpose-built to handle late-arriving events and high-performance AI/agent workloads.
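One common way streaming systems handle late-arriving events is event-time windowing with a watermark and an allowed-lateness bound. The sketch below shows that general technique under stated assumptions: the 60-second window, the 30-second lateness bound, and the `window_counts` helper are hypothetical values and names chosen for illustration, and nothing here describes how Dataverses implements it internally.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

WINDOW_SECONDS = 60      # assumed tumbling-window size
ALLOWED_LATENESS = 30    # assumed bound on how far behind an event may arrive

Event = Tuple[int, str]  # (event_time_in_seconds, name)


def window_counts(events: Iterable[Event]) -> Tuple[Dict[int, int], List[Event]]:
    """Count events per event-time window; set aside events that arrive too late."""
    counts: Dict[int, int] = defaultdict(int)
    too_late: List[Event] = []
    max_seen = 0
    for event_time, name in events:
        max_seen = max(max_seen, event_time)
        # The watermark trails the newest event time by the allowed lateness.
        watermark = max_seen - ALLOWED_LATENESS
        window_start = event_time - event_time % WINDOW_SECONDS
        if window_start + WINDOW_SECONDS <= watermark:
            # The window closed before this event arrived.
            too_late.append((event_time, name))
        else:
            counts[window_start] += 1
    return dict(counts), too_late


# Events listed in arrival order; "c" and "e" arrive out of order.
arrivals = [(5, "a"), (70, "b"), (50, "c"), (130, "d"), (20, "e")]
counts, too_late = window_counts(arrivals)
print(counts, too_late)
```

In this run, event "c" is out of order but still inside the lateness bound, so it is counted in its original window, while "e" arrives after its window has closed and is set aside for separate handling.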

Yes. Dataverses is built to process billions of events daily. It automatically scales its enterprise-grade Kafka infrastructure and processing engines to maintain performance as query load grows.

Organizations can choose between cloud-hosted or on-premises deployment. Dataverses provides 24/7 enterprise support, dedicated customer success managers, and comprehensive API documentation to ensure operational reliability.

The platform is designed for "radical simplification," allowing teams to configure end-to-end architectures through intuitive UIs. This enables the launch of production-ready data systems in minutes rather than weeks, with zero operational overhead.

Discover Dataverses Resources

Stay updated with the latest insights, best practices, and technical deep-dives from our data engineering and analytics experts.

Ready to Transform Your Data Pipeline?

Start building streaming data applications today. Get up and running in minutes with our cloud platform or deploy on-premises.

No credit card required • 14-day free trial • Cancel anytime